"AI Scientists" - New Collaborators for Rare Disease Research?

In the quest to understand and treat more than 6,000 rare diseases, researchers face a number of limitations: in the amount of available data, in access to interdisciplinary expertise, and in the time they can devote to research.

Could a new breed of “AI scientists” help bridge gaps in rare disease research?

Recent advances in artificial intelligence have given rise to experimental systems known as "AI scientists" or "AI co-scientists": research agents that carry out complex scientific tasks with a degree of autonomy. Unlike traditional AI tools that perform narrow functions, such as an algorithm that analyzes genetic data or predicts molecular structures, these AI scientists are designed to take part in the scientific process itself. They can read and summarize literature, generate hypotheses, design experiments, and assist with writing papers. Initial tests of such systems have drawn mixed responses from human scientists. Some praised the time saved and even reported instances of the AI producing novel insights; others questioned whether the hypotheses and analyses generated by AI scientists were truly novel or simply iterations of existing knowledge.

The evidence on real-world use of AI scientists is currently sparse and often anecdotal. Still, they could have great potential to complement other AI-based research initiatives, especially in underserved areas of research such as rare diseases. While rare diseases collectively affect over 300 million people worldwide, the disease mechanisms of individual rare diseases are often poorly understood, hampering the discovery of diagnostic tools and treatments. Rare disease patients often face significant delays in getting a correct diagnosis, and around 95% of rare diseases lack any approved treatment. The research challenges for rare diseases are inherent: fewer patients and limited funding often result in sparse data.

Are AI scientists suitable tools to bridge gaps in rare disease research by synthesizing knowledge from disparate, interdisciplinary data sources into disease hypotheses, and then helping to translate those hypotheses into new diagnostics, biomarkers, and therapies?

| Rare Disease Research Challenge | Potential "AI Scientist" Capability |
|---|---|
| Sparse and fragmented data | Knowledge synthesis from multi-modal sources |
| Siloed specialist expertise | Simulation of interdisciplinary agent personas |
| Long discovery timelines | Hypothesis generation & protocol drafting in minutes |
| Lack of funding for wet-lab experimentation | Cost-effective in silico pre-screening (with potential for efficient coupling to automated laboratories) |
| Underexplored repurposing opportunities | Novel disease hypotheses with suggested drugs and repurposing opportunities |

Table 1: Rare disease research challenges and how AI scientists might tackle them

A New Generation of "AI Scientists"

While there is ongoing debate about the disruptive potential of AI in scientific discovery and drug development, the technology has already arrived in the day-to-day world of science: large numbers of AI-aided (often low-quality) papers are flooding the scientific literature, and reviewers are using generative AI tools to evaluate manuscripts. However, according to a recent survey, most researchers use generative AI mainly for writing assistance and simpler writing-related tasks such as literature summaries or citation management.

Going beyond that, a number of tech companies, startups like Sakana AI and FutureHouse, and academic groups, for example at Stanford and in Shanghai, have begun developing more ambitious AI-based research tools. These so-called “AI scientists” or “co-scientists” are designed to collaborate with scientists by autonomously carrying out research activities, from proposing scientific hypotheses to running in silico experiments. Prominent examples include Google’s AI "co-scientist," powered by its Gemini 2.0 model, and Stanford’s "Virtual Lab," built on OpenAI’s GPT-4. These science-focused AI agents typically combine a large language model (LLM) with external tools or databases. Human researchers query the agent system with instructions in natural language and can add relevant background information, such as scientific papers or data. The AI agents then “discuss” the problem the researcher has outlined and deliver the requested output as a report. This could include, for example, a research hypothesis for the cause of a rare disease, suggestions for drugs that could treat it, and a protocol for testing the hypothesis in the laboratory.

Google’s AI co-scientist uses a multi-agent architecture in which specialized agent modules (for idea generation, critique, experimental design, and so on) iteratively refine scientific hypotheses through a self-critical feedback loop. This loop includes ranking tournaments to select the strongest hypotheses and an "evolution" step to improve their quality. Stanford’s Virtual Lab similarly assigns distinct agent "personas", such as a Principal Investigator who leads the “discussion”, a Critic who questions ideas, and several scientist agents with different domain expertise, to simulate an interdisciplinary research meeting.
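To make the idea of a ranking tournament more concrete, here is a minimal Python sketch of Elo-style pairwise ranking of candidate hypotheses. It is an illustration under stated assumptions, not a description of Google’s implementation: the Elo scheme, the K-factor of 32, the starting rating of 1200, and the hypothesis names are all assumptions, and the `judge` function is a hypothetical stand-in for an LLM "critic" agent that decides which of two hypotheses is stronger.

```python
import itertools
import random
from typing import Callable, Dict, List


def expected_score(r_a: float, r_b: float) -> float:
    """Probability that A beats B under the standard Elo model."""
    return 1.0 / (1.0 + 10 ** ((r_b - r_a) / 400))


def elo_tournament(
    hypotheses: List[str],
    judge: Callable[[str, str], str],
    rounds: int = 3,
    k: float = 32.0,        # assumed K-factor (update step size)
    initial: float = 1200.0,  # assumed starting rating
    seed: int = 0,
) -> Dict[str, float]:
    """Run round-robin pairwise 'debates' and return an Elo rating per hypothesis."""
    rng = random.Random(seed)
    ratings = {h: initial for h in hypotheses}
    pairs = list(itertools.combinations(hypotheses, 2))
    for _ in range(rounds):
        rng.shuffle(pairs)  # vary the match order between rounds
        for a, b in pairs:
            winner = judge(a, b)  # in a real system: an LLM comparing the two hypotheses
            s_a = 1.0 if winner == a else 0.0
            e_a = expected_score(ratings[a], ratings[b])
            ratings[a] += k * (s_a - e_a)
            ratings[b] += k * ((1.0 - s_a) - (1.0 - e_a))  # zero-sum update
    return ratings


if __name__ == "__main__":
    # Hypothetical judge: prefers the hypothesis with the higher made-up plausibility score.
    toy_scores = {"hypothesis_A": 0.2, "hypothesis_B": 0.9, "hypothesis_C": 0.5}
    judge = lambda a, b: a if toy_scores[a] >= toy_scores[b] else b
    ratings = elo_tournament(list(toy_scores), judge)
    for h, r in sorted(ratings.items(), key=lambda kv: -kv[1]):
        print(f"{h}: {r:.1f}")
```

With this toy judge, hypothesis_B, which wins every comparison, ends with the highest rating. The appeal of an Elo-style scheme is that many cheap, possibly noisy pairwise judgements can be turned into a single ranking without ever asking the judge for an absolute score.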

While researchers are still working out the optimal number of agents and rounds of “discussion”, early tests suggest that such multi-agent systems can produce research outputs that experts rate as slightly more novel and impactful than those of single large models.

However, like all LLMs, the models powering these AI scientists are prone to hallucination. This remains true for reasoning-based tools like OpenAI’s DeepResearch and for multi-agent systems like Google’s co-scientist, even though they appear to hallucinate less than earlier LLMs.

Moreover, the models lack world knowledge and context. They have little sense of how and when a piece of information came about, which can make it difficult for them to judge the quality and relevance of information from different sources. Members of the scientific community noted this problem when testing OpenAI’s DeepResearch.

These issues underscore the need for human oversight of AI scientist systems. While the AI system independently processes literature and databases, and proposes hypotheses and experimental plans, human scientists must assess the relevance of ideas, catch mistakes, and add context that AI systems lack. Indeed, most experts see today’s AI co-scientists as time-saving aids that can help scientists make interesting connections faster, but not as tools to make truly autonomous or novel discoveries.

In the rare disease space, however, where data, funding, and disease experts are all scarce, saving time and broadening expertise could still make a highly valuable contribution to research.

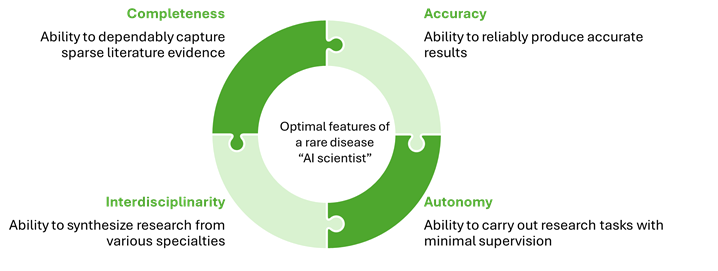

Figure 1: Optimal features of an "AI scientist" for rare disease

Interdisciplinary Collaborators for Rare Disease Research?

Both Google and Stanford emphasize interdisciplinarity as a strength of their AI scientist systems. In the rare disease field, such breadth could be extremely valuable. Rare disease phenotypes are often heterogeneous and inconsistent, with the same disorder manifesting differently depending on a patient’s genetic background or environment. Many rare diseases also affect multiple organ systems.

Understanding the full picture of a rare disease can require piecing together clues from multiple specialties. However, specialized expertise in the rare disease space is often siloed. Thus, researchers frequently face what Google’s team calls the "breadth vs. depth" conundrum: they need highly specialized, niche knowledge on top of broad, cross-disciplinary insight to make progress.

AI systems like Google’s co-scientist and Stanford’s Virtual Lab could address this need for specialized yet collaborative insight by simulating an "on-demand" interdisciplinary research team, bringing together knowledge that might otherwise be inaccessible to an individual scientist. For instance, a researcher investigating a rare metabolic disorder might not have a geneticist or a structural biologist on hand, but they could brainstorm with AI agents "trained" in those specialties, which can call relevant software tools, to generate new hypotheses, research protocols, and even molecule designs.

There are, however, several challenges to this use of "AI scientists" as "interdisciplinary collaborators". Hallucinations and missing context can make it hard for non-experts to interpret the AI system’s outputs and to spot errors and misjudgments. A rare disease researcher asking an AI "neurologist" agent for advice will get a list of plausible-sounding suggestions. However, the further the researcher’s expertise is from the "AI expert’s", the harder it will be to distinguish useful suggestions from irrelevant or erroneous ones. This could lead the rare disease researcher down wrong paths, wasting time on literature review and experimentation. The sparse literature available in the field might exacerbate this challenge. On the other hand, early testers of Google’s co-scientist reported that the tool also picked up on ideas supported by only sparse literature, such as a drug that could potentially be repurposed for liver fibrosis.

Interdisciplinary discourse, however, is more than reading through lists of scientific advice. It also involves serendipity: chance encounters that spark a researcher’s ingenuity. It remains to be seen whether the rigid format of interacting with an AI system, which is (currently) mainly text-based, can stimulate scientists’ creativity the way that, for example, a discussion at a scientific conference would. Cultural factors might also hamper interdisciplinary discourse with AI scientists. The underlying LLMs are generally trained to be helpful and non-controversial. Science and medicine, however, thrive on discourse and disagreement, which can spark new hypotheses and experimental avenues. More argumentative "AI scientist" personas could potentially help stimulate scientists’ creativity, much like a difficult question from a supervisor in a lab meeting or from a competitor during a talk at a scientific conference.

"AI Scientists" in Drug Discovery and Repurposing

One of the most promising applications of AI in rare disease research is drug discovery and repurposing. A number of AI-centered biotechs, such as Insilico and Recursion, are using artificial intelligence for drug discovery and development in the rare disease space. Other players, like UK-based startup Healx and the non-profit EveryCure, are exploring the potential to repurpose existing drugs for rare conditions. Notably, such repurposing strategies have the potential to identify life-saving treatments for individual rare disease patients. Researchers at UPenn, who are connected to EveryCure, used an AI system to analyze ~4,000 approved drugs in search of a treatment for a patient with idiopathic multicentric Castleman’s disease, a lethal rare condition. The AI suggested treatment with the TNF-α inhibitor adalimumab. Two years after beginning treatment, the patient, who had previously been about to enter hospice care, was still in remission.

"AI scientists" like Google’s co-scientist have also been tested on drug repurposing, for example by suggesting new repurposing candidates for acute myeloid leukemia that were shown to inhibit tumor cell growth in laboratory tests. Similar drug repurposing analyses could be carried out for various rare diseases. Moreover, "AI scientists" could be linked to AI models for drug repurposing that are already employed in the rare disease space. For instance, a knowledge graph model might output a list of promising drug candidates for a rare disease, while the "AI scientist" could examine those candidates in depth, generate testable disease hypotheses, and suggest experiments for the researchers to carry out.

At the current stage, "AI scientist" systems operate in the in silico realm: the AI makes suggestions, and it is up to a human researcher to test them. Biotech companies like Recursion are already using automated high-throughput lab experiments for rare disease research. In an iterative feedback loop, the experimental data are used to train the AI, which then suggests further experiments. Genentech’s Aviv Regev, who is exploring such a virtuous cycle of experimentation and machine learning with partner Nvidia, calls it the "Lab in the loop." Coupling such automated laboratory systems to "AI scientists" could let human researchers tap into this automated cycle, extracting interpretable disease hypotheses from it and using them to inform new research questions. Such an approach could significantly shorten the path from a question ("what causes this rare disease?") to testable hypotheses and initial data, with the human researcher acting as a supervisor of the AI system.
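The shape of such a loop (choose an experiment, run it, update the model, repeat) can be sketched in a few lines of Python. This is a toy under loud assumptions, not Genentech’s or Recursion’s method: the "model" is just a running mean per candidate plus a UCB1-style exploration bonus, and `run_assay` is a hypothetical function standing in for an automated wet-lab measurement.

```python
import math
import random
from typing import Callable, Dict, List


def lab_in_the_loop(
    candidates: List[str],
    run_assay: Callable[[str], float],
    n_cycles: int = 20,
    explore: float = 1.0,
) -> Dict[str, List[float]]:
    """Toy closed loop: pick an experiment, run it, update the estimates, repeat.

    The 'model' is the running mean of each candidate's assay results plus a
    UCB1-style bonus that favours candidates tested less often.
    """
    results: Dict[str, List[float]] = {c: [] for c in candidates}
    for t in range(1, n_cycles + 1):
        untested = [c for c in candidates if not results[c]]
        if untested:
            choice = untested[0]  # test every candidate once before exploiting
        else:
            def ucb(c: str) -> float:
                mean = sum(results[c]) / len(results[c])
                return mean + explore * math.sqrt(2 * math.log(t) / len(results[c]))
            choice = max(candidates, key=ucb)
        results[choice].append(run_assay(choice))  # the (simulated) wet-lab step
    return results


if __name__ == "__main__":
    rng = random.Random(42)
    true_effect = {"drug_1": 0.1, "drug_2": 0.6, "drug_3": 0.3}  # made-up ground truth
    assay = lambda c: true_effect[c] + rng.gauss(0, 0.05)  # hypothetical noisy assay
    results = lab_in_the_loop(list(true_effect), assay, n_cycles=30)
    for c, vals in results.items():
        print(c, len(vals), round(sum(vals) / len(vals), 2))
```

The `explore` parameter captures the central trade-off of any such cycle: spending experiments on the currently most promising candidate versus testing less-explored ones that the model is still uncertain about. In a real system, the human supervisor would also review which experiments are proposed and why.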

The Future of "AI scientists" in Rare Disease Research

It’s early days for agentic AI systems in science (and elsewhere), and predicting their ultimate impact is difficult. "AI scientists" might remain time-saving tools whose output needs verification by an expert eye, or they might become integrated into research and contribute to the development of novel disease hypotheses. For rare disease researchers, they might at least prove a worthwhile tool for quickly scanning interdisciplinary research and translating it into disease hypotheses. Using "AI scientists" for drug discovery and repurposing may hold particular promise, especially if these systems can be coupled with automated labs.

However, further tests will be needed to see whether the autonomy and accuracy of "AI scientists" are sufficient to integrate them into the day-to-day work of rare disease researchers.

References

- https://research.google/blog/accelerating-scientific-breakthroughs-with-an-ai-co-scientist/

- https://arxiv.org/abs/2502.18864

- https://www.nature.com/articles/d41586-024-02842-3

- https://sakana.ai/ai-scientist-first-publication/

- https://www.futurehouse.org/research-announcements/launching-futurehouse-platform-ai-agents

- https://www.nature.com/articles/d41586-025-02028-5

- https://www.biopharmatrend.com/post/793-ai-for-treating-rare-disease/

- https://www.thelancet.com/journals/langlo/article/PIIS2214-109X(24)00056-1/fulltext

- https://www.nature.com/articles/d41586-025-02241-2

- https://www.nature.com/articles/d41586-025-00894-7

- https://www.nature.com/articles/d41586-025-00343-5

- https://arxiv.org/abs/2410.09403

- https://www.nature.com/articles/d41586-024-01684-3

- https://www.biorxiv.org/content/10.1101/2024.11.11.623004v1

- https://www.nature.com/articles/d41586-025-01485-2

- https://www.science.org/content/blog-post/evaluation-deep-research-performance

- https://www.nature.com/articles/d41586-025-00377-9

- https://www.nature.com/articles/s41598-025-93794-9

- https://www.nejm.org/doi/10.1056/NEJMc2412494

- https://pharmaphorum.com/news/genentech-nvidia-partner-lab-loop-ai-platform

- https://www.recursion.com/
