Google Expands MedGemma Collection With Multimodal Health AI Models for Open Development

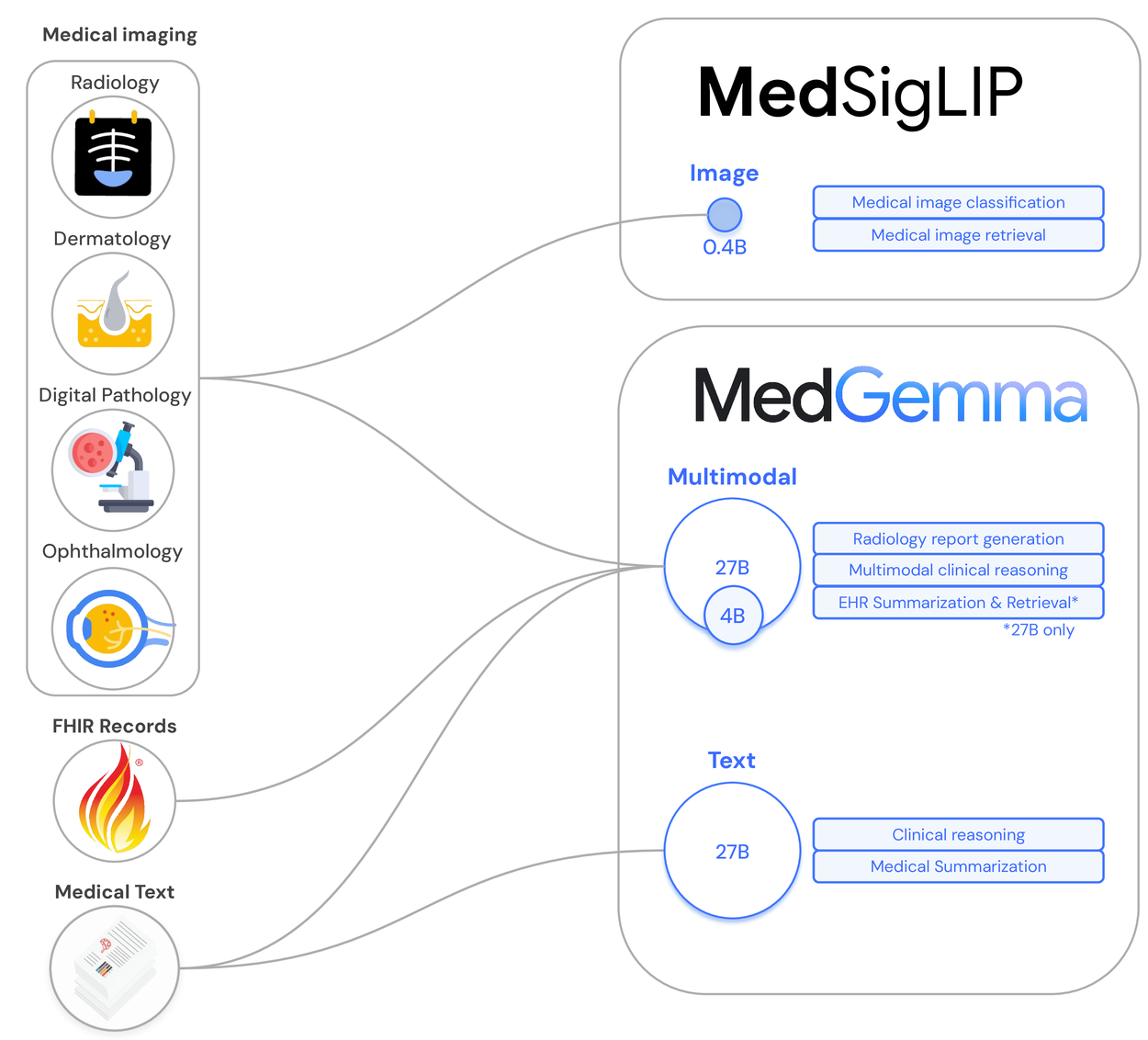

Google Research has released two new models—MedGemma 27B Multimodal and MedSigLIP—as part of its open-source Health AI Developer Foundations (HAI-DEF) initiative, expanding the MedGemma suite of generative models tailored for medical imaging, text, and electronic health record (EHR) applications. These models are intended to support privacy-preserving, locally deployable AI tools across the healthcare and life sciences sectors.

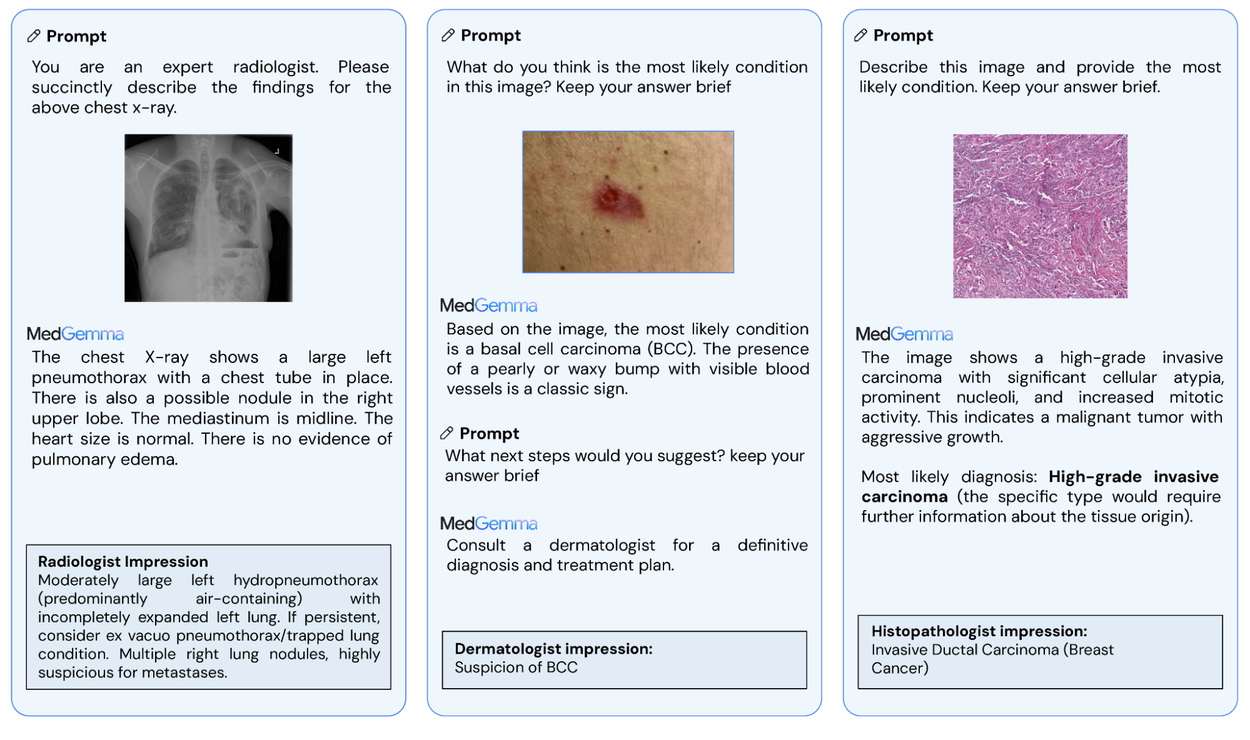

MedGemma 27B Multimodal builds on earlier MedGemma releases by supporting joint reasoning across longitudinal EHRs and medical images. According to Google, it scores 87.7% on the MedQA benchmark, within 3 points of the larger DeepSeek R1 model at one-tenth the inference cost. The smaller MedGemma 4B model scores 64.4% on MedQA, and a US board-certified radiologist judged 81% of the chest X-ray reports it generated to be sufficient for patient management.

MedGemma prompt examples (image credit: Google)

MedSigLIP, a 400M-parameter encoder derived from the SigLIP architecture, was trained on diverse imaging modalities including chest X-rays, histopathology, dermatology, and fundus photos. It supports classification, zero-shot labeling, and semantic image retrieval across medical datasets, while retaining general-purpose image understanding.
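To make zero-shot labeling and semantic retrieval concrete, here is a minimal sketch of how embeddings from a SigLIP-style encoder are typically used. It does not call MedSigLIP itself: the three-dimensional vectors stand in for real image and text embeddings, and the function names and the `scale` and `bias` values are illustrative assumptions (in SigLIP these two are learned parameters).

```python
import numpy as np


def l2_normalize(x):
    """Scale vectors to unit length so dot products become cosine similarities."""
    x = np.asarray(x, dtype=float)
    return x / np.linalg.norm(x, axis=-1, keepdims=True)


def zero_shot_scores(image_emb, text_embs, scale=10.0, bias=-5.0):
    """Score one image against several label prompts, SigLIP-style.

    Each label gets an independent sigmoid score, so scores need not sum to 1.
    `scale` and `bias` are placeholder values, not MedSigLIP's learned ones.
    """
    logits = scale * (l2_normalize(text_embs) @ l2_normalize(image_emb)) + bias
    return 1.0 / (1.0 + np.exp(-logits))


def retrieve(query_emb, gallery_embs, k=2):
    """Return indices of the k gallery items most similar to the query."""
    sims = l2_normalize(gallery_embs) @ l2_normalize(query_emb)
    return np.argsort(-sims)[:k]


# Toy stand-ins for encoder outputs (real embeddings have hundreds of dimensions).
labels = ["normal chest X-ray", "chest X-ray with pleural effusion"]
text_embs = np.array([[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]])
image_emb = np.array([0.9, 0.1, 0.0])

scores = zero_shot_scores(image_emb, text_embs)
predicted = labels[int(np.argmax(scores))]

gallery = np.array([[0.0, 1.0, 0.0], [1.0, 0.1, 0.0], [0.5, 0.5, 0.0]])
top_matches = retrieve(image_emb, gallery, k=2)
```

With a real encoder, the text embeddings would come from prompts describing each candidate label, and the gallery would hold precomputed embeddings for an image archive.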

According to Yossi Matias, VP and Head of Google Research, early adoption examples include:

- Tap Health (India): testing MedGemma’s reliability in clinical-context-sensitive tasks;

- Chang Gung Memorial Hospital (Taiwan): studying its performance on traditional Chinese-language medical literature and queries;

- DeepHealth (USA): evaluating MedSigLIP for chest X-ray triage and nodule detection.

All MedGemma models are designed for accessibility: they can run on a single GPU and are compatible with Hugging Face and Google Cloud’s Vertex AI endpoints. The 4B variants can be adapted for mobile deployment. Google emphasizes that while these models offer strong out-of-the-box performance, they are intended for adaptation and validation in domain-specific settings, not direct clinical use.
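As a rough illustration of the Hugging Face route, the sketch below builds a chat-style prompt pairing an image with a question and passes it to a `transformers` pipeline. The model identifier `google/medgemma-4b-it`, the system prompt, and the helper names are assumptions not stated in this article; check the model's Hugging Face page for the current identifier and access terms before running it.

```python
def build_messages(image, question, system_prompt="You are an expert radiologist."):
    """Build a chat-format prompt that pairs one image with a text question."""
    return [
        {"role": "system", "content": [{"type": "text", "text": system_prompt}]},
        {
            "role": "user",
            "content": [
                {"type": "text", "text": question},
                {"type": "image", "image": image},
            ],
        },
    ]


def generate_report(image, question, model_id="google/medgemma-4b-it", max_new_tokens=200):
    """Run one image-plus-text query; `model_id` is an assumed identifier."""
    # Imported lazily so build_messages works without these packages installed.
    import torch
    from transformers import pipeline

    pipe = pipeline(
        "image-text-to-text",
        model=model_id,
        torch_dtype=torch.bfloat16,
        device_map="auto",  # requires the `accelerate` package
    )
    output = pipe(text=build_messages(image, question), max_new_tokens=max_new_tokens)
    # The pipeline returns the full conversation; the last turn is the model's reply.
    return output[0]["generated_text"][-1]["content"]
```

Here `image` would be a PIL image, for example one loaded with `PIL.Image.open`. Calling `generate_report` downloads the model weights, so it assumes a machine with enough GPU memory for the chosen variant.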

Further details are available in Google's technical report.
