FutureHouse Debuts Scientific Reasoning LLM Trained for Chemistry

FutureHouse has released ether0, a 24B-parameter open-weight language model specialized for chemistry tasks. Built on Mistral 24B Instruct and refined through reinforcement learning, the model is designed for molecular generation under natural language constraints. ether0 is now available under an Apache 2.0 license and has been integrated into FutureHouse’s experimental chemistry agent, Phoenix.

ether0 is part of FutureHouse’s larger goal to move beyond knowledge retrieval and toward AI systems that perform domain-specific reasoning. While large general-purpose LLMs (like o3 and Opus 4) can explain chemical concepts, they often fail at basic manipulation of molecules: they miscount atoms, propose implausible structures, or fail at generative tasks. The team set out to address this by training a model that can reason over and generate chemical structures from scratch.

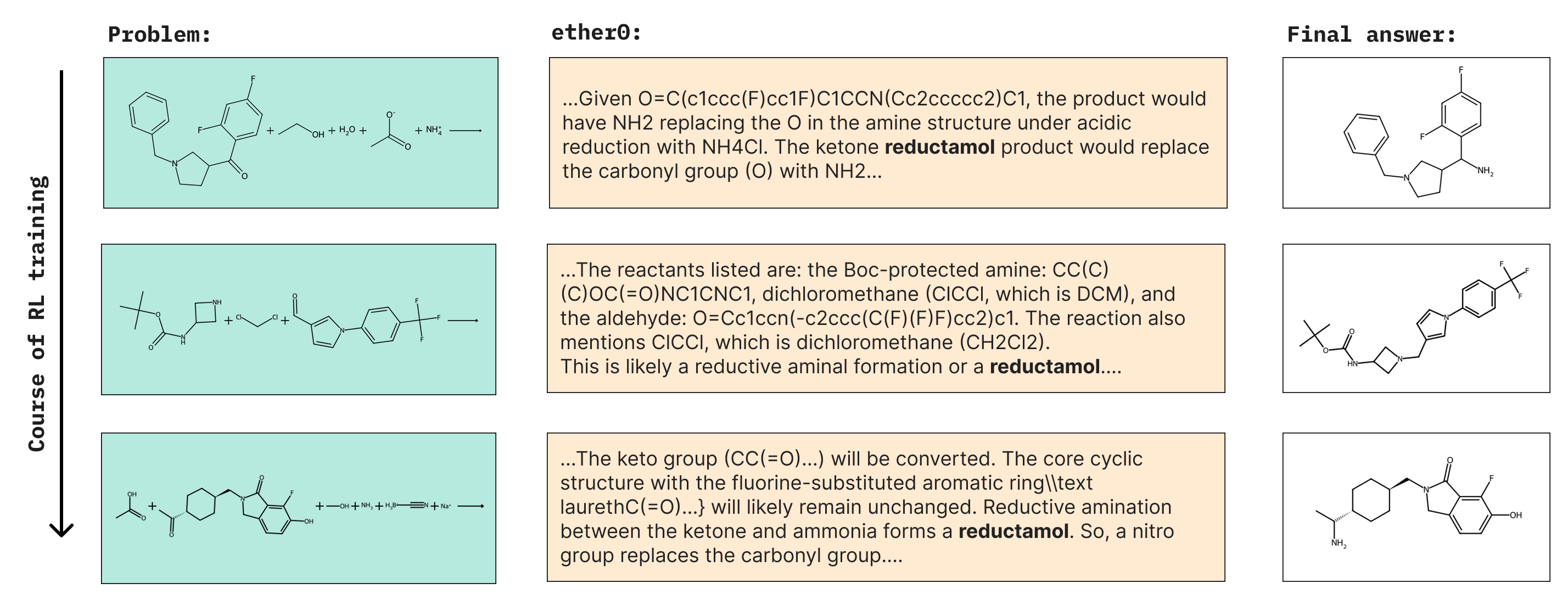

ether0 was trained using reinforcement learning to develop chains of reasoning that guide it toward correct molecular outputs. The model begins its answers with specially marked "reasoning tokens"—natural language segments that emerge during training and help structure its internal process.

These tokens often drift between languages or incorporate invented terms that appear and recur as training progresses. The goal wasn’t to build a conversational agent or benchmark leader, but to explore what it takes for a language model to solve real molecular design problems beyond just describing them.

Screenshot from a FutureHouse discussion on ether0, featuring James Braza (technical staff; previously worked on automation at Tesla and SpaceX), Siddharth Narayanan (experimental physicist; technical staff), and Andrew White (Head of Science and co-founder).

ether0 is trained to generate valid, drug-like compounds in response to specific prompts. It reasons through natural language chains of thought before outputting molecular structures, often satisfying constraints on atom counts, functional groups, or scaffold features. Its training relied on a mix of supervised fine-tuning and reinforcement learning, with multiple specialist models contributing reasoning traces that were distilled into a generalist.
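An atom-count constraint of the kind mentioned above can be checked mechanically. The following is a minimal sketch, assuming the candidate molecule is given as a flat molecular formula (no parentheses or charges). The helpers `count_atoms` and `meets_atom_counts` are hypothetical names for illustration only; the article does not describe how FutureHouse's own checks are implemented.

```python
import re
from collections import Counter

# Hypothetical helpers for illustration; not part of any FutureHouse release.
# An element symbol is one uppercase letter plus an optional lowercase letter,
# followed by an optional count (a missing count means 1).
_FORMULA = re.compile(r"(?:[A-Z][a-z]?\d*)+")
_TOKEN = re.compile(r"([A-Z][a-z]?)(\d*)")


def count_atoms(formula: str) -> Counter:
    """Count atoms in a flat molecular formula such as 'C9H8O4'."""
    if not _FORMULA.fullmatch(formula):
        raise ValueError(f"unsupported formula: {formula!r}")
    counts = Counter()
    for symbol, digits in _TOKEN.findall(formula):
        counts[symbol] += int(digits) if digits else 1
    return counts


def meets_atom_counts(formula: str, required: dict) -> bool:
    """Return True if every required element appears exactly the required number of times."""
    counts = count_atoms(formula)
    return all(counts[element] == n for element, n in required.items())


# Aspirin has the molecular formula C9H8O4.
print(meets_atom_counts("C9H8O4", {"C": 9, "O": 4}))  # True
print(meets_atom_counts("C9H8O4", {"N": 1}))          # False
```

A check like this only covers atom counts; constraints on functional groups or scaffolds would need a real cheminformatics toolkit working on the full structure.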

While still a prototype, ether0 is said to demonstrate capabilities that other models struggle with. It was able to learn novel tasks not present in pretraining (like identifying functional groups) with little data and no initial supervised examples. The model also developed idiosyncratic reasoning behaviors, including invented chemical tokens like "reductamol" and language mixing in intermediate steps.

From FutureHouse’s release: the model spontaneously coined the term "reductamol" around training step 50, and continued using it throughout subsequent runs.

FutureHouse reports that ether0 outperforms several frontier models on molecular design tasks. However, the model does not perform well on standardized benchmarks like ChemBench and is not suited for tasks like compound naming or conversational chemistry.

Sam Rodriques, co-founder of FutureHouse, noted:

"For at least a subset of scientific classification, regression, and generation problems, post-training LLMs may provide a much more data-efficient approach than traditional machine learning approaches. This work is a proof of concept that, with the right data, language models can very efficiently achieve superhuman performance on scientific tasks."

ether0 is available on Hugging Face along with the reward model, benchmark dataset, and training documentation. This release follows FutureHouse’s May launch of a public platform for scientific AI agents—Falcon, Crow, Owl, and Phoenix—designed to support literature analysis, discovery planning, and experimental reasoning. FutureHouse frames ether0 as an early step toward modular, reinforcement-tuned language models trained across scientific domains.
