Salt AI Raises $10M for Drag-and-Drop Platform to Build AI Workflows in Life Sciences

Salt AI closed a $10 million round led by Morpheus Ventures, with Struck Capital, Marbruck Investments, and CoreWeave participating. The company plans to expand deployments with biopharma and healthcare customers and scale AI engineering. The platform is a visual-first, code-capable environment for assembling multi-model AI workflows across drug discovery, clinical development, revenue cycle management, intelligent data navigation, and enterprise operations.

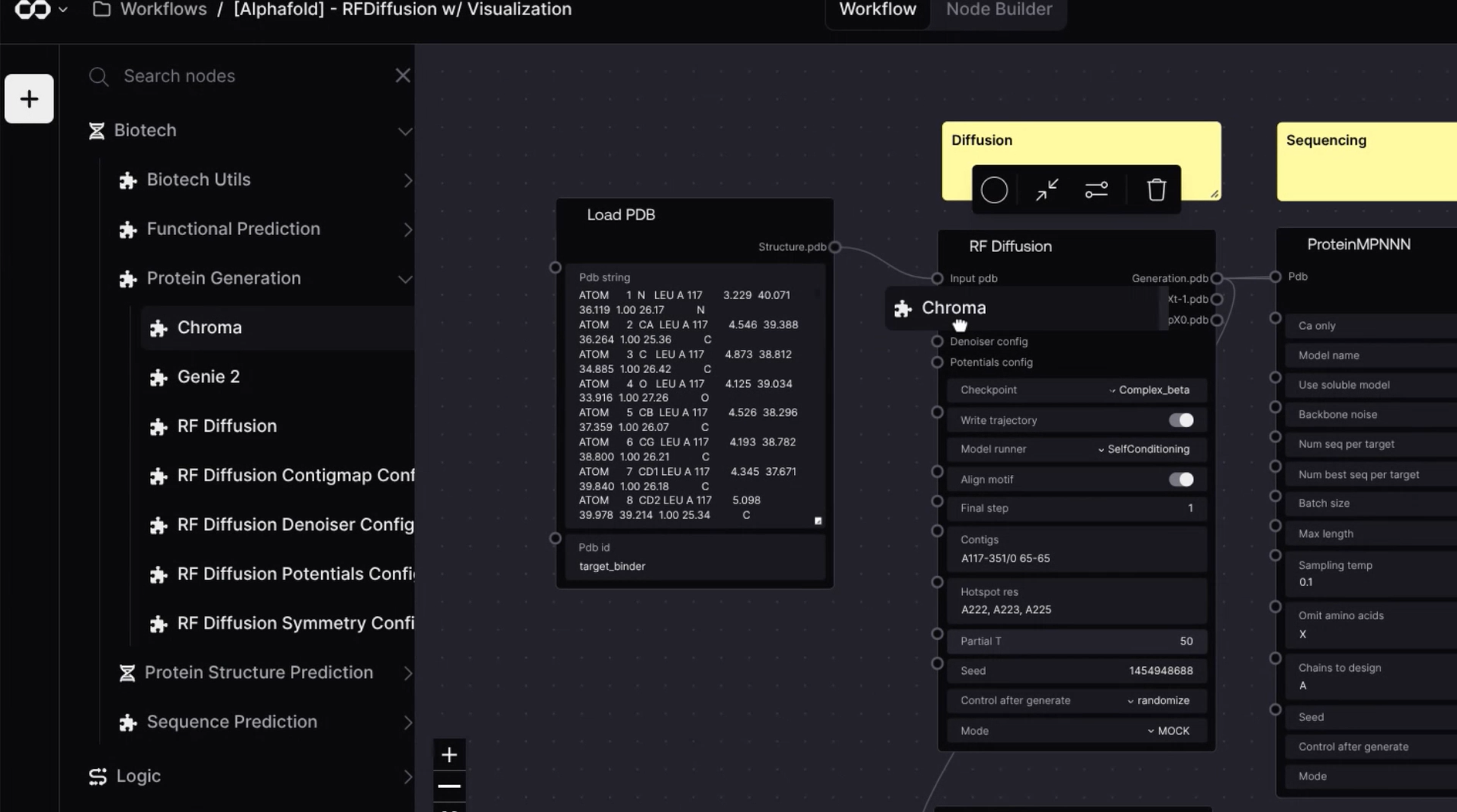

Salt positions its stack around two distinct components: a compute-ready context layer that captures workflow graphs, prompts, and data links as a versionable JSON artifact; and the Salt Matrix, a catalog of sector-specific connectors, models, and solutions used to rapidly assemble workflows in a drag-and-drop IDE. The system supports native integrations with general LLMs, life-science and clinical models, ELN/LIMS and EHR systems, public data repositories, and web APIs. CoreWeave is both an investor and the AI cloud provider Salt uses to run complex model workloads.

Salt's visual IDE

Teams drag and drop steps (nodes) that call different models (general LLMs and domain models), connect them to ELNs, LIMS, EHRs, or public datasets, set prompts and parameters, and run the workflow end to end. The platform saves that setup (workflow graph, prompts, and data links) as a versioned JSON object, so results are reproducible and can be compared and audited. Users can hot-swap models without rewriting the workflow, track runs, compare outputs, and then deploy the pipeline as an API, chat tool, or web app.
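To make the "versioned JSON object" idea concrete, here is a minimal Python sketch under loose assumptions. The article does not describe Salt's actual schema, so the `Node` and `Workflow` classes, their fields, the content-hash versioning scheme, and the `lims://` URI are all hypothetical. The sketch shows how a workflow graph, prompts, and data links can be serialized canonically, given a reproducible version ID, reloaded, and hot-swapped to a different model without touching the rest of the graph.

```python
import hashlib
import json
from dataclasses import asdict, dataclass, field, replace


@dataclass(frozen=True)
class Node:
    # One step in the workflow graph (hypothetical schema, not Salt's).
    node_id: str
    model: str
    prompt: str
    params: dict = field(default_factory=dict)


@dataclass(frozen=True)
class Workflow:
    nodes: tuple[Node, ...]              # steps in the graph
    edges: tuple[tuple[str, str], ...]   # (source_id, target_id) pairs
    data_links: dict                     # node_id -> hypothetical data-source URI

    def to_json(self) -> str:
        # sort_keys and fixed separators give a canonical serialization,
        # so identical setups always produce identical bytes.
        return json.dumps(asdict(self), sort_keys=True, separators=(",", ":"))

    @classmethod
    def from_json(cls, text: str) -> "Workflow":
        # Rebuild the same workflow from its saved JSON artifact.
        data = json.loads(text)
        return cls(
            nodes=tuple(Node(**n) for n in data["nodes"]),
            edges=tuple(tuple(e) for e in data["edges"]),
            data_links=data["data_links"],
        )

    def version_id(self) -> str:
        # Content hash of the canonical JSON: any change to a model,
        # prompt, edge, or data link yields a new version ID.
        return hashlib.sha256(self.to_json().encode("utf-8")).hexdigest()[:12]

    def swap_model(self, node_id: str, new_model: str) -> "Workflow":
        # Hot-swap one node's model; graph, prompts, and links are unchanged.
        if node_id not in {n.node_id for n in self.nodes}:
            raise KeyError(node_id)
        new_nodes = tuple(
            replace(n, model=new_model) if n.node_id == node_id else n
            for n in self.nodes
        )
        return replace(self, nodes=new_nodes)


# Usage: two-step workflow with made-up model names and a made-up LIMS link.
wf = Workflow(
    nodes=(
        Node("fold", "structure-model-a", "Predict structure for {sequence}"),
        Node("qc", "general-llm-x", "Summarize QC flags for {structure}"),
    ),
    edges=(("fold", "qc"),),
    data_links={"fold": "lims://samples/batch-42"},
)
wf2 = wf.swap_model("fold", "structure-model-b")
print(wf.version_id() != wf2.version_id())  # True: the swap is a new version
print(Workflow.from_json(wf.to_json()).version_id() == wf.version_id())  # True
```

Hashing a canonical serialization is one common way to get reproducible, auditable versions: two runs that report the same version ID were configured identically, and a model swap shows up as a version change rather than a silent edit.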

"Salt Matrix" is the catalog of connectors, models, and ready-made pieces the user assembles. The "compute-ready context layer" is the part that packages prompts and workflow logic so models follow the same playbook every time. According to the company, the platform autoscales runs across CPUs and GPUs, vectorizes data at the workflow level for retrieval, and supports SOC 2 and HIPAA compliance with 21 CFR Part 11-ready workflows. Deployments can be private cloud, on-prem, VPC, or air-gapped.

Additional compliance and deployment details include traceable retrieval with audit trails and three named deployment modes: Lightweight (single-machine), Scalable (multi-node), and Enterprise (high-availability autoscaling), with options to use existing GPUs or HPC clusters.

The Ellison Medical Institute reports using Salt AI since summer 2024 to accelerate computational biology workloads, designing and analyzing thousands of compounds; as of August 2025, two protein candidates had advanced to in-depth wet-lab studies following positive in vitro results. A separate case study details a live hit-identification pipeline that chains nine models (including a split-optimized AlphaFold2 reported to run 22x faster) and pairs them with interactive QC protein visualizations for biochemists. The reported result is validated computational protein hits, with implementation time reduced to weeks.

Salt’s stated thesis is that in a multi-model environment of growing data and "AI swarms," context acts as a compute layer; the platform is designed to make that context programmable, portable, and fast.
